BERT-Large: Prune Once for DistilBERT Inference Performance - Neural Magic

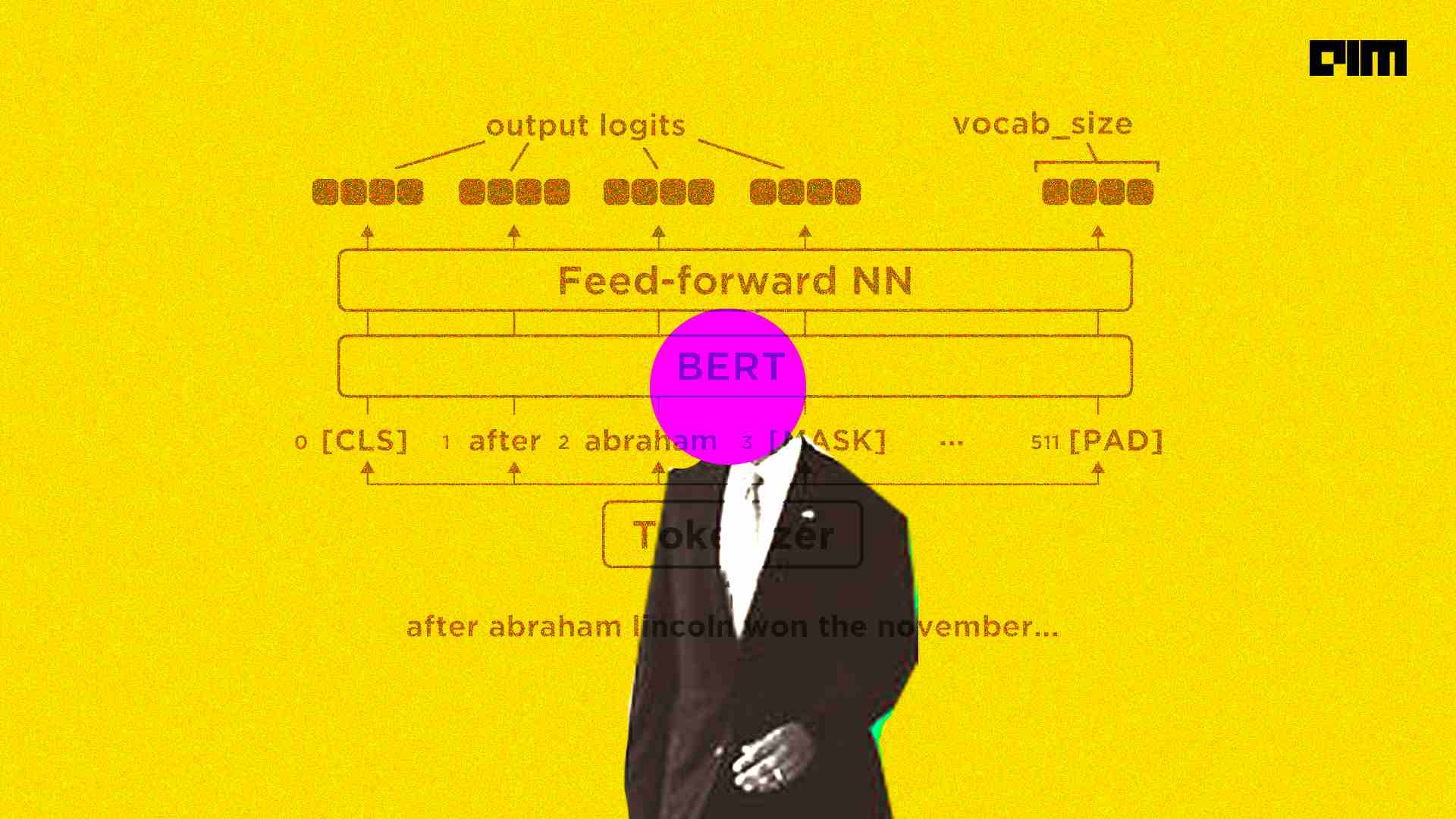

How to Compress Your BERT NLP Models For Very Efficient Inference

Large Language Models: DistilBERT — Smaller, Faster, Cheaper and Lighter, by Vyacheslav Efimov

Learn how to use pruning to speed up BERT, The Rasa Blog

Running Fast Transformers on CPUs: Intel Approach Achieves Significant Speed Ups and SOTA Performance

Neural Magic open sources a pruned version of BERT language model

Our paper accepted at NeurIPS Workshop on Diffusion Models, kevin chang posted on the topic

BERT-Large: Prune Once for DistilBERT Inference Performance - Neural Magic

Tuan Nguyen on LinkedIn: Faster, Smaller, and Cheaper YOLOv5

Running Fast Transformers on CPUs: Intel Approach Achieves Significant Speed Ups and SOTA Performance

oBERT: GPU-Level Latency on CPUs with 10x Smaller Models