ICLR 2020: Compression based bound for non-compressed network: unified generalization error analysis of large compressible deep neural network

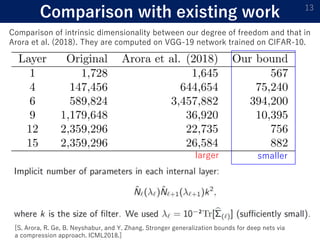

1) The document presents a new compression-based bound for analyzing the generalization error of large deep neural networks, even when the networks are not explicitly compressed.

2) It shows that if a trained network's weight matrices and the covariance matrices of its hidden-layer outputs are nearly low rank, then the network has a small intrinsic dimensionality and can be efficiently compressed.

3) This allows deriving a tighter generalization bound than existing approaches, providing insight into why overparameterized networks generalize well despite having more parameters than training examples.
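The link between low rank and compressibility in point 2 can be illustrated with a small parameter count. The following is a minimal sketch, not the bound or the compression scheme from the presentation. It assumes a single layer whose weight matrix is exactly rank `r` (the summary only speaks of low-rank properties), and the sizes `m`, `n`, `r` and all helper names are hypothetical.

```python
import random

def matvec(matrix, vector):
    """Multiply a dense matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]

def matmul(left, right):
    """Multiply two dense matrices given as lists of rows."""
    cols = list(zip(*right))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in left]

def param_counts(m, n, r):
    """Parameters stored by a dense m x n layer vs. a rank-r factorization."""
    return m * n, r * (m + n)

random.seed(0)
m, n, r = 64, 48, 4  # hypothetical layer sizes and rank (assumptions)

# Build W = U V, so W has rank at most r by construction.
U = [[random.gauss(0, 1) for _ in range(r)] for _ in range(m)]
V = [[random.gauss(0, 1) for _ in range(n)] for _ in range(r)]
W = matmul(U, V)

# The factored form computes the same layer output with far fewer parameters.
x = [random.gauss(0, 1) for _ in range(n)]
dense_out = matvec(W, x)
factored_out = matvec(U, matvec(V, x))
max_gap = max(abs(a - b) for a, b in zip(dense_out, factored_out))

dense_params, factored_params = param_counts(m, n, r)
print(dense_params, factored_params, max_gap)
```

In this toy setting the factored layer stores `r * (m + n)` numbers instead of `m * n`, which is the sense in which a low-rank network has fewer effective degrees of freedom than its raw parameter count suggests.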

NeurIPS2020 (spotlight)] Generalization bound of globally optimal non convex neural network training: Transportation map estimation by infinite dimensional Langevin dynamics

Peter Richtarik

On the Resilience of Deep Learning for reduced-voltage FPGAs

Iclr2020: Compression based bound for non-compressed network: unified generalization error analysis of large compressible deep neural network

Heterogeneous graphlets-guided network embedding via eulerian-trail-based representation - ScienceDirect

Threats, attacks and defenses to federated learning: issues, taxonomy and perspectives, Cybersecurity

PDF] Compression based bound for non-compressed network: unified generalization error analysis of large compressible deep neural network

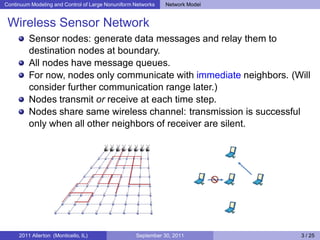

Continuum Modeling and Control of Large Nonuniform Networks

Papers Accepted to ICLR 2020

CNN for modeling sentence